Understanding ZFS: What is a vdev in ZFS?

A vdev in ZFS, short for virtual device, is a crucial component of the ZFS storage architecture. A zpool, which is the top-level structure in ZFS, consists of one or more storage vdevs and zero or more support vdevs. A storage vdev is a collection of block or character devices arranged in a specific topology, such as single, mirror, RAIDz1/2/3, or dRAID. Each vdev type has its own characteristics in terms of fault tolerance and performance. Additionally, there are support vdev types, including LOG, CACHE, SPECIAL, and SPARE, which provide specific functionality to the zpool. Understanding the role and configuration of vdevs is essential for building resilient storage pools and optimizing performance in ZFS.

Key Takeaways:

- A vdev in ZFS is a virtual device that is a fundamental component of the ZFS storage architecture.

- A zpool consists of one or more storage vdevs and zero or more support vdevs.

- Storage vdevs have different topologies, such as single, mirror, RAIDz1/2/3, or dRAID, each with its own characteristics.

- Support vdevs, including LOG, CACHE, SPECIAL, and SPARE, provide additional functionality to the zpool.

- Understanding vdevs is essential for building resilient storage pools and optimizing performance in ZFS.

ZFS Storage Pool Structure: Exploring Zpools, vdevs, and devices

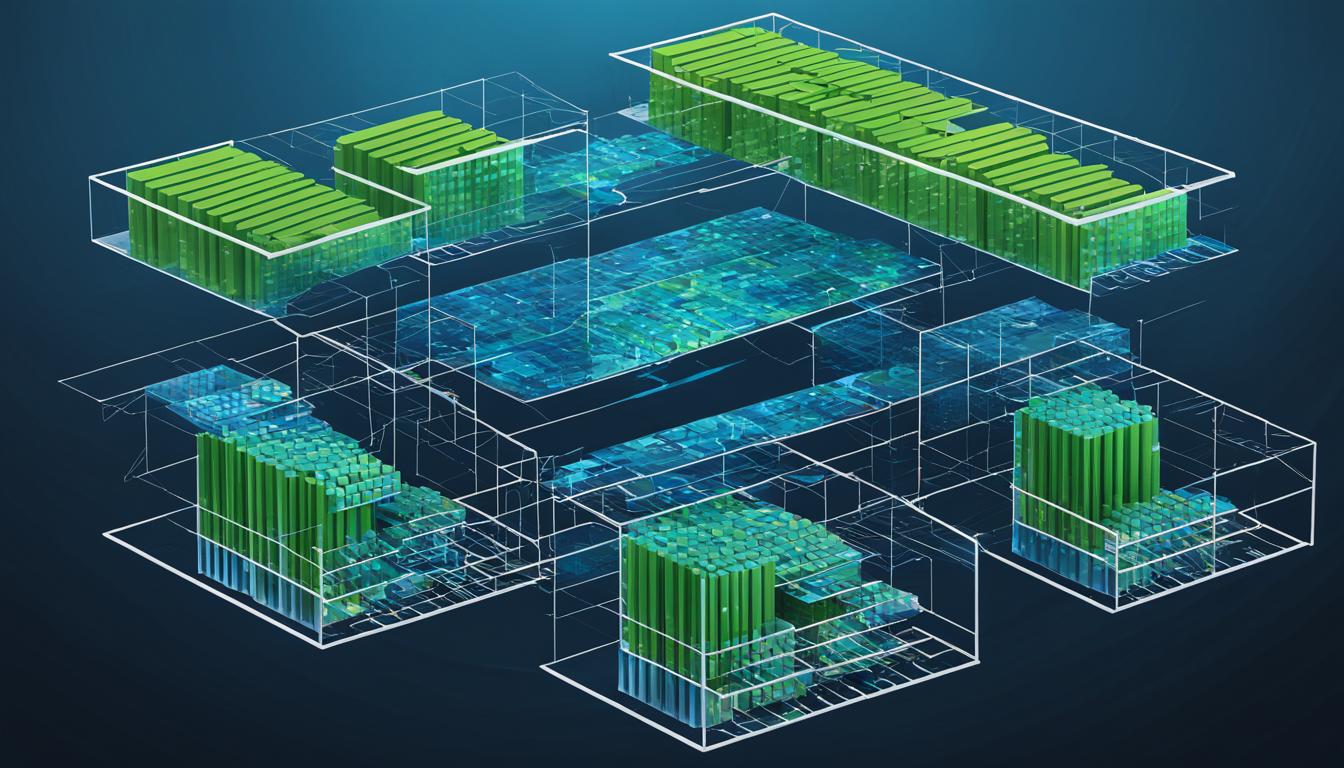

In ZFS, the storage pool structure is composed of three interconnected components: zpools, vdevs, and devices. Understanding the relationship between these elements is crucial for building robust storage systems in ZFS.

A zpool is the top-level structure in ZFS and contains one or more vdevs. Each zpool is a self-contained unit of data storage and cannot share vdevs with other zpools. Redundancy in ZFS is implemented at the vdev level, not the zpool level: if any storage vdev or SPECIAL vdev in a zpool fails, the entire zpool and all of its data are lost. To guard against this, every vdev in the pool must be configured with appropriate fault tolerance.
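The vdev-level redundancy rule can be expressed as a small model. This is a simplified sketch under stated assumptions: it only counts failed devices per vdev, treats a mirror as alive while one member is intact and a RAIDz vdev as alive up to its parity level, and ignores checksum errors, partial failures, and failures during resilvering. The `Vdev` class and `pool_survives` function are hypothetical names, not part of any ZFS API.

```python
from dataclasses import dataclass

# Parity level per RAIDz keyword ("raidz" is an alias for raidz1).
RAIDZ_PARITY = {"raidz": 1, "raidz1": 1, "raidz2": 2, "raidz3": 3}


@dataclass
class Vdev:
    """Hypothetical model of one storage (or SPECIAL) vdev."""
    kind: str        # "single", "mirror", "raidz1", "raidz2" or "raidz3"
    width: int       # number of devices in the vdev
    failed: int = 0  # number of devices that have failed

    def tolerated_failures(self) -> int:
        """How many device failures this vdev can absorb without data loss."""
        if self.kind == "single":
            return 0
        if self.kind == "mirror":
            return self.width - 1  # one intact member still holds a full copy
        if self.kind in RAIDZ_PARITY:
            return RAIDZ_PARITY[self.kind]
        raise ValueError(f"unknown vdev kind: {self.kind}")

    def is_alive(self) -> bool:
        return self.failed <= self.tolerated_failures()


def pool_survives(vdevs: list[Vdev]) -> bool:
    """The pool survives only if every storage and SPECIAL vdev survives."""
    return all(v.is_alive() for v in vdevs)


if __name__ == "__main__":
    pool = [Vdev("raidz2", 6, failed=2), Vdev("mirror", 2)]
    print(pool_survives(pool))  # True: both vdevs are within their tolerance
    pool[1].failed = 2
    print(pool_survives(pool))  # False: losing the whole mirror loses the pool
```

The second case shows why redundancy cannot be "shared": the RAIDz2 vdev's parity does nothing to protect the mirror next to it.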

A vdev is a virtual device within a zpool. It can be a single disk, a mirror of two or more disks, or a RAIDz group with parity. Each vdev contains one or more devices, which can be disks or any other block devices that support random access. When building a ZFS storage pool, connect standard unaltered drives directly or through plain Host Bus Adapters (HBAs) rather than RAID cards or BIOS RAID, so that ZFS can see and manage each disk itself.

To provide a visual representation:

| Zpool | Vdev | Device(s) |
|---|---|---|
| Zpool 1 | Vdev 1 (single) | Device 1 |
| Zpool 1 | Vdev 2 (mirror) | Devices 2 and 3 |
| Zpool 2 | Vdev 1 | Device 4 |
| Zpool 2 | Vdev 2 | Device 5 |

By creating a well-designed zpool structure with the appropriate number and configuration of vdevs, users can achieve the desired level of fault tolerance, performance, and storage capacity in their ZFS-based storage systems.
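A pool layout like the ones in the table maps directly onto a `zpool create` command line, where each topology keyword (`mirror`, `raidz1`, `raidz2`, `raidz3`) starts a new vdev and bare device names become single-disk vdevs. The sketch below builds, but does not run, such a command. The minimum device counts follow the OpenZFS documentation (a mirror needs two or more devices; a raidz group needs one more device than its parity level). The function name and the device names `sda`…`sdd` are hypothetical placeholders; on Linux, stable `/dev/disk/by-id/` paths are usually preferred.

```python
import shlex

# Minimum device counts: a mirror needs two or more devices, and a raidz
# group needs one more device than its parity level.
MIN_DEVICES = {"mirror": 2, "raidz1": 2, "raidz2": 3, "raidz3": 4}


def zpool_create_argv(pool: str, vdevs: list[tuple[str, list[str]]]) -> list[str]:
    """Build (but do not run) a `zpool create` argument list.

    Each vdev is a (topology, devices) pair; "single" means a plain device
    listed without a topology keyword.
    """
    argv = ["zpool", "create", pool]
    for topology, devices in vdevs:
        if topology == "single":
            if len(devices) != 1:
                raise ValueError("a single vdev has exactly one device")
            argv.extend(devices)
            continue
        minimum = MIN_DEVICES.get(topology)
        if minimum is None:
            raise ValueError(f"unsupported topology: {topology}")
        if len(devices) < minimum:
            raise ValueError(f"{topology} needs at least {minimum} devices")
        argv.append(topology)
        argv.extend(devices)
    return argv


if __name__ == "__main__":
    # Two mirror vdevs striped together in one pool.
    argv = zpool_create_argv("tank", [("mirror", ["sda", "sdb"]),
                                      ("mirror", ["sdc", "sdd"])])
    print(shlex.join(argv))  # zpool create tank mirror sda sdb mirror sdc sdd
```

`zpool` itself performs further checks; for example, it refuses to mix vdevs with different redundancy levels in one pool unless forced with `-f`.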

Understanding ZFS vdev Topologies: Single, Mirror, RAIDz1/2/3

In ZFS, the choice of vdev topology plays a crucial role in determining fault tolerance and performance. ZFS offers several vdev topologies to meet different storage needs. Let’s explore them:

Single vdev

The single vdev topology consists of one disk. Its performance is simply that of the underlying disk, and it offers no fault tolerance: ZFS can still detect corruption through checksums, but it has no redundant copy of user data to repair it from (unless the copies property is raised). If the disk fails, the vdev, and with it the entire pool, is lost. This topology is suitable only for non-critical or easily replaceable data.

Mirror vdevs

Mirror vdevs add fault tolerance by storing full copies of the data on two or more devices. Every member holds the same data, so the vdev survives as long as at least one member is intact. Reads can be spread across all members, which often makes mirrors faster than a single disk for reads, while every write must go to each member, so write performance is roughly that of a single disk. Mirrors are well suited to critical data and to workloads that need good random I/O performance.

RAIDz1 vdev

RAIDz1 is a striped parity vdev topology similar to RAID5. It distributes data and parity across all drives in the vdev, with one parity block per stripe rather than a dedicated parity disk. RAIDz1 survives the failure of any single drive. If a second drive fails before the first has been replaced and resilvered, the vdev, and therefore the pool, is lost.

RAIDz2 vdev

RAIDz2 is one of the most widely used vdev topologies. It uses two parity blocks per stripe, so it can withstand the failure of any two drives in the vdev without data loss. This extra margin matters during long resilvers of large drives, and RAIDz2 is a common recommendation for critical data where extra redundancy is necessary.

RAIDz3 vdev

RAIDz3 is the most fault-tolerant RAIDz level, using triple parity to survive the failure of any three drives in the vdev. It suits critical data that requires maximum redundancy, at the cost of more capacity spent on parity and somewhat lower performance.

When choosing a vdev topology, weigh the fault tolerance you need against performance and capacity. Mirror vdevs generally offer the best performance and the fastest resilvering, but a two-way mirror gives up half of the raw capacity. RAIDz1, RAIDz2, and RAIDz3 spend one, two, or three drives' worth of capacity on parity and tolerate the same number of drive failures per vdev. Take into account the criticality of the data, the cost of storage, and the desired level of redundancy to build a resilient and efficient ZFS storage system.
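The capacity side of this trade-off can be estimated with the rule of thumb from the OpenZFS documentation: a raidz group of N disks of size X with P parity disks holds approximately (N − P) × X. The sketch below applies that rule and treats a mirror as holding one disk's worth of data. It is an approximation under stated assumptions: equal-sized disks, and no allowance for RAIDz padding, metadata, or the space ZFS reserves internally. The function names are hypothetical.

```python
# Parity devices per RAIDz level.
PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def usable_capacity(topology: str, disks: int, disk_tb: float) -> float:
    """Approximate usable capacity (TB) of one vdev built from equal disks."""
    if topology == "single":
        if disks != 1:
            raise ValueError("a single vdev has exactly one disk")
        return disk_tb
    if topology == "mirror":
        return disk_tb  # every member stores the same data
    if topology in PARITY:
        return (disks - PARITY[topology]) * disk_tb  # (N - P) * X
    raise ValueError(f"unknown topology: {topology}")


def efficiency(topology: str, disks: int) -> float:
    """Fraction of raw capacity that is usable (independent of disk size)."""
    return usable_capacity(topology, disks, 1.0) / disks


if __name__ == "__main__":
    for topology, disks in [("mirror", 2), ("raidz1", 6), ("raidz2", 6), ("raidz3", 6)]:
        usable = usable_capacity(topology, disks, 8.0)
        print(f"{topology:<7} {disks} x 8 TB: {usable:4.0f} TB usable "
              f"({efficiency(topology, disks):.0%} of raw)")
```

For six 8 TB disks this gives roughly 40, 32, and 24 TB for RAIDz1, RAIDz2, and RAIDz3, while a two-way mirror of 8 TB disks keeps 8 TB of 16 TB raw. A pool's total is approximately the sum of its storage vdevs.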

Exploring ZFS Support vdev Types: LOG, CACHE, SPECIAL, SPARE

In addition to storage vdevs, ZFS provides various support vdev types that offer specific functionality to the zpool. These support vdev types include LOG, CACHE, SPECIAL, and SPARE.

ZFS LOG vdev (ZIL – ZFS Intent Log)

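The LOG vdev, often called a SLOG (separate intent log), moves the ZFS Intent Log (ZIL) onto a dedicated device. Every pool has a ZIL; without a LOG vdev it lives on the storage vdevs. Synchronous writes are recorded in the ZIL so they can be acknowledged quickly and safely, and the data is then written to main storage from memory with the next transaction group. The ZIL is read back only after a crash or power loss, to replay sync writes that were acknowledged but not yet committed. A fast, low-latency LOG device therefore improves sync write performance; it does not speed up asynchronous writes.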

ZFS CACHE vdev (L2ARC – Level 2 Adaptive Replacement Cache)

The CACHE vdev holds the Level 2 Adaptive Replacement Cache (L2ARC), a second-level read cache that extends the in-memory ARC onto a fast device such as an SSD. Blocks that are about to be evicted from the ARC can be kept on the L2ARC, so repeated reads can be served from the cache device instead of the slower storage vdevs. Because the L2ARC needs some RAM for its own bookkeeping, it helps most when the frequently read working set is larger than RAM.

ZFS SPECIAL vdev

The SPECIAL vdev stores the pool's metadata and, if the special_small_blocks dataset property is set, small data blocks as well. Placing these on fast devices such as SSDs speeds up metadata-heavy operations and small-block I/O. The SPECIAL vdev does not add fault tolerance on its own: as with a storage vdev, losing it loses the whole pool, so it should have at least the same redundancy as the storage vdevs, typically a mirror.
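The placement rule can be sketched as a small function. `special_small_blocks` is a real dataset property (set with `zfs set`; the default of 0 disables small-block placement), and the OpenZFS documentation states that blocks smaller than or equal to its value go to the special allocation class. The function itself is a hypothetical simplification: it ignores what happens when the SPECIAL vdev fills up and any other block types ZFS may place there.

```python
def allocation_class(block_size: int, is_metadata: bool,
                     special_small_blocks: int = 0,
                     has_special_vdev: bool = True) -> str:
    """Return "special" or "normal" for a block in a simplified placement model."""
    if not has_special_vdev:
        return "normal"   # without a SPECIAL vdev everything uses storage vdevs
    if is_metadata:
        return "special"  # metadata goes to the SPECIAL vdev
    # special_small_blocks = 0 disables small-block placement.
    if special_small_blocks > 0 and block_size <= special_small_blocks:
        return "special"
    return "normal"


if __name__ == "__main__":
    threshold = 32 * 1024  # e.g. special_small_blocks=32K on this dataset
    for size in (4096, 32 * 1024, 128 * 1024):
        print(size, allocation_class(size, is_metadata=False,
                                     special_small_blocks=threshold))
```

One consequence of the "smaller than or equal to" rule: a threshold at or above a dataset's recordsize would send all of that dataset's file data to the SPECIAL vdev, which can fill it quickly.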

ZFS SPARE vdev

The SPARE vdev provides hot spare devices. When a device in a redundant vdev fails, a spare can take its place automatically (on Linux this is handled by the ZFS Event Daemon, zed), and ZFS resilvers onto it to restore the vdev's redundancy. Once the failed disk has been physically replaced, the spare returns to the pool's list of available spares. Spares shorten the window during which a degraded vdev runs without full redundancy, reducing the risk of data loss and downtime.

Each of these support vdev types contributes to the functionality and efficiency of ZFS storage pools. The combination and configuration of these vdev types can be tailored to meet specific storage requirements, providing a versatile and robust storage solution.
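Support vdevs are added to an existing pool with `zpool add`, using the `log`, `cache`, `special`, and `spare` keywords. The sketch below builds, but does not run, those commands. It encodes one documented restriction (cache devices cannot be mirrored) and, as an assumption of this sketch, treats spares as individual devices too; mirroring LOG and SPECIAL vdevs is shown because losing a SPECIAL vdev loses the pool. The function name and the device names are hypothetical placeholders.

```python
import shlex

SUPPORT_KINDS = {"log", "cache", "special", "spare"}
UNMIRRORABLE = {"cache", "spare"}  # cache and spare devices are added individually


def zpool_add_support_argv(pool: str, kind: str, devices: list[str],
                           mirror: bool = False) -> list[str]:
    """Build a `zpool add` argument list for a support vdev (not executed)."""
    if kind not in SUPPORT_KINDS:
        raise ValueError(f"unknown support vdev type: {kind}")
    if not devices:
        raise ValueError("at least one device is required")
    if mirror and kind in UNMIRRORABLE:
        raise ValueError(f"{kind} devices cannot be mirrored")
    argv = ["zpool", "add", pool, kind]
    if mirror:
        if len(devices) < 2:
            raise ValueError("a mirror needs at least two devices")
        argv.append("mirror")
    return argv + devices


if __name__ == "__main__":
    plan = [
        zpool_add_support_argv("tank", "log", ["nvme0n1", "nvme1n1"], mirror=True),
        zpool_add_support_argv("tank", "cache", ["nvme2n1"]),
        zpool_add_support_argv("tank", "special", ["ssd0", "ssd1"], mirror=True),
        zpool_add_support_argv("tank", "spare", ["sde"]),
    ]
    for argv in plan:
        print(shlex.join(argv))  # e.g. zpool add tank log mirror nvme0n1 nvme1n1
```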

ZFS Datasets and Blocks: Organizing Data in ZFS

In ZFS, data organization revolves around datasets and blocks. A dataset is typically a mounted filesystem with its own properties, such as an optional quota that limits how much data it can hold. ZFS also offers a special kind of dataset called a zvol, which presents a block device without a filesystem layer, for example to back a virtual machine disk. This versatility allows for a wide range of storage configurations based on specific needs and requirements.

On the other hand, blocks are the fundamental units of data storage within ZFS. Each block is a discrete piece of data stored on a single vdev. ZFS employs a copy-on-write transactional mechanism, which ensures data integrity and enables advanced features like snapshots and, where redundancy is available, self-healing of corrupted blocks.

Blocks are in turn read and written in whole sectors, the smallest addressable units of data on the storage devices. The sector size ZFS uses for each vdev is set by its ashift value, the base-2 logarithm of the sector size (ashift=12 means 4 KiB sectors), so every block occupies a whole number of sectors.
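The effect of sector size on space usage can be shown with a short calculation. `ashift` is a real per-vdev setting (for example chosen at pool creation with `zpool create -o ashift=12`); the functions below are a hypothetical sketch that only rounds a block up to whole sectors and ignores compression, RAIDz parity and padding, and metadata.

```python
def sector_size(ashift: int) -> int:
    """ashift is the base-2 logarithm of the sector size."""
    return 1 << ashift


def allocated_bytes(logical_bytes: int, ashift: int) -> int:
    """Round a block up to whole sectors.

    Simplified: ignores compression, RAIDz parity and padding, and metadata.
    """
    sector = sector_size(ashift)
    sectors = -(-logical_bytes // sector)  # ceiling division
    return sectors * sector


if __name__ == "__main__":
    for ashift in (9, 12):
        print(f"ashift={ashift}: sector={sector_size(ashift)} B, "
              f"1000-byte block uses {allocated_bytes(1000, ashift)} B")
```

A 1,000-byte block occupies 1,024 bytes on an ashift=9 vdev but 4,096 bytes on an ashift=12 vdev, which is why very small blocks waste relatively more space on large-sector devices.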

“ZFS revolutionizes data organization with its unique approach to datasets and blocks, providing enhanced reliability, scalability, and performance.”

Data Organization in ZFS

The organization of datasets and blocks in ZFS plays a pivotal role in managing data and leveraging the advanced capabilities of the file system. By structuring data into datasets and utilizing the block-level storage model, ZFS offers several key benefits:

- Consistent on-disk state through copy-on-write transactions

- Data integrity and self-healing capabilities

- Snapshot functionality for easy data versioning and recovery

- Flexibility to tune storage settings per dataset

ZFS Datasets: A Closer Look

Datasets in ZFS are highly flexible and support a wide range of use cases. They can be organized hierarchically, similar to directories in a traditional file system, allowing logical segregation of data. Each dataset can have its own properties, and most properties are inherited from the parent dataset unless set locally. Quotas and reservations let administrators enforce storage limits and guarantee space for important datasets.
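Property inheritance can be modeled as a walk up the dataset hierarchy, similar to what the SOURCE column of `zfs get` reports (`local`, `inherited from tank`, `default`). This is a hypothetical sketch: the nested dictionary of locally set properties is an assumption, the default value is supplied by the caller and is only an example, and the model applies only to inheritable properties such as `compression` (quota and reservation are set per dataset and are not inherited).

```python
def resolve(local_props: dict[str, dict[str, str]], dataset: str,
            prop: str, default: str) -> tuple[str, str]:
    """Return (value, source) for an inheritable property of a dataset.

    local_props maps dataset names to the properties set locally on them.
    """
    name = dataset
    while True:
        if prop in local_props.get(name, {}):
            value = local_props[name][prop]
            source = "local" if name == dataset else f"inherited from {name}"
            return value, source
        if "/" not in name:  # reached the pool's root dataset
            return default, "default"
        name = name.rsplit("/", 1)[0]  # move to the parent dataset


if __name__ == "__main__":
    props = {
        "tank": {"compression": "lz4"},
        "tank/home/alice": {"compression": "zstd"},
    }
    for ds in ("tank", "tank/home", "tank/home/alice", "tank/home/bob"):
        print(ds, resolve(props, ds, "compression", "off"))
```

Setting a property once on a parent dataset therefore configures every descendant that has not overridden it.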

Block-Level Storage in ZFS

The block-level storage model used by ZFS offers several advantages over traditional file systems. Because copy-on-write never overwrites live data in place, the on-disk state is always consistent and snapshots are cheap to create. The trade-off is that copy-on-write can fragment free space over time, particularly on pools that are kept nearly full, so leaving free space headroom helps maintain performance.

Overall, understanding the intricacies of ZFS datasets and blocks allows administrators to efficiently manage data, optimize storage utilization, and harness the advanced capabilities of the file system.

OpenZFS vs Oracle ZFS: Key Differences and Features

When it comes to ZFS, there are two main branches: OpenZFS and Oracle ZFS. After Oracle acquired Sun Microsystems and stopped releasing ZFS source code, the community continued development from the last open-source release, and that work became OpenZFS. The two branches share a common origin but have since diverged, with distinct features and priorities.

OpenZFS is the version of ZFS commonly used by individuals and small-scale deployments, though it also runs in large installations. It is developed openly by a community of contributors. Oracle ZFS, on the other hand, is proprietary and primarily found in Oracle Solaris and Oracle's ZFS Storage Appliances, which are geared towards enterprise-level deployments.

For the purposes of this article, when we mention ZFS, we are primarily referring to OpenZFS. OpenZFS is supported on various operating systems, including Linux, FreeBSD, and Illumos. It can be readily installed from repositories or through specific distributions that ship with ZFS by default.

By understanding the differences between OpenZFS and Oracle ZFS, users can make informed decisions when it comes to deploying and administering ZFS systems. Let’s take a closer look at some of the key differentiating factors:

- Features: OpenZFS and Oracle ZFS offer a similar set of features, including data integrity, snapshots, copy-on-write, and self-healing capabilities. However, each branch may have unique additional features or enhancements developed by their respective communities.

- Administration: The administration and management tools for OpenZFS and Oracle ZFS may differ, with each branch having its own set of tools and interfaces.

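- Filesystem Compatibility: OpenZFS strives for compatibility across operating systems, so a pool can generally be moved between Linux, FreeBSD, and illumos systems running compatible OpenZFS versions. Pools created with newer Oracle ZFS pool versions cannot be imported by OpenZFS, and Oracle ZFS is tightly integrated with Oracle's own hardware and software stack.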

Overall, both OpenZFS and Oracle ZFS provide robust storage solutions with advanced capabilities. However, the choice between the two may depend on specific requirements, preferences, and the scale of the deployment.

Building a ZFS Storage Server: Hardware Requirements and Recommendations

When it comes to building a ZFS storage server, you don’t need specialized hardware. ZFS can run on commodity hardware, making it accessible and cost-effective for various storage needs. However, there are specific hardware requirements and recommendations to consider for optimal performance and data protection.

ECC RAM (Error-Correcting Code RAM) is highly recommended for ZFS storage servers as it provides better data integrity by correcting errors in memory. While it’s not mandatory, using ECC RAM can help prevent data corruption and ensure the reliability of your ZFS storage system.

For the storage drives in your ZFS server, it’s best to use standard unaltered drives. Avoid using RAID cards and BIOS RAID setups, as they can interfere with ZFS’s advanced storage management capabilities. By using individual drives, you can take full advantage of ZFS’s data protection features such as data checksumming and self-healing.

The amount of RAM in your ZFS server is an important consideration. ZFS leverages unused memory as a cache through its Adaptive Replacement Cache (ARC) mechanism. More memory allows for a larger ARC, resulting in improved performance through better caching. While ZFS can run on systems with as little as 8GB of RAM, it’s recommended to have a sufficient amount of RAM to accommodate your storage workload and take advantage of ZFS’s caching capabilities.

To summarize, when building a ZFS storage server:

- Consider using ECC RAM for better data integrity.

- Use standard unaltered drives and avoid RAID cards or BIOS RAID.

- Allocate sufficient RAM to maximize ZFS’s caching capabilities.

By following these hardware requirements and recommendations, you can build a reliable and high-performance ZFS storage server that meets your storage needs.

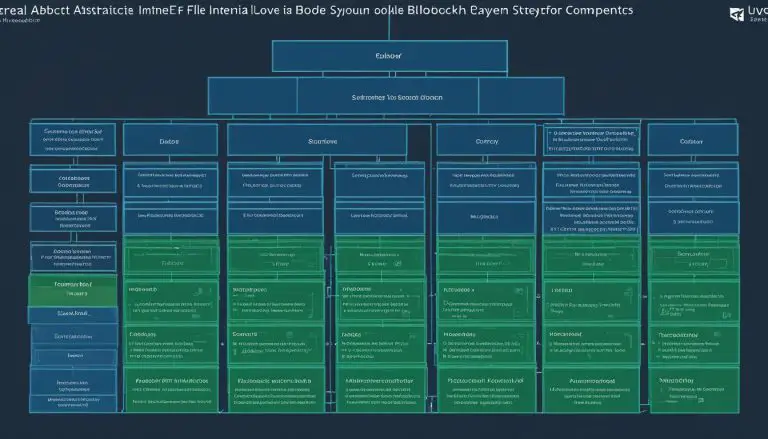

Hardware Requirements and Recommendations Summary:

| Hardware | Requirements/Recommendations |
|---|---|
| ECC RAM | Recommended for better data integrity |
| Storage drives | Use standard unaltered drives; avoid RAID cards and BIOS RAID |
| RAM capacity | Enough to maximize ZFS’s caching (ARC) capabilities |

Remember, building a ZFS storage server doesn’t have to be complicated or expensive. By considering the hardware requirements and recommendations, you can create a reliable and efficient storage system using ZFS.

Installing and Using ZFS: Getting Started Guide

Now that you have a good understanding of ZFS and its components, it’s time to dive into the installation and usage process. ZFS is compatible with various operating systems, including Linux, FreeBSD, and Illumos. The installation can be done through repositories or specific distributions that come pre-loaded with ZFS.

Once you have installed ZFS, you can start setting up your storage. The first step is to create a zpool, which is the top-level structure in ZFS. A zpool consists of one or more vdevs, representing your storage devices, and allows you to aggregate and manage the available storage capacity.

To configure your vdevs, decide on the topology that best suits your needs: a single disk, a mirror for redundancy and good performance, or a RAIDz configuration for redundancy with better capacity efficiency. Base the choice of vdev topology on your storage requirements.

Once you have created your zpool and configured your vdevs, you can create datasets. Datasets are logical containers within the zpool where you can store your data. They can be organized hierarchically, similar to directories in a filesystem, allowing you to manage and organize your data efficiently.

With datasets in place, you can assign properties to fine-tune the behavior of your storage. ZFS offers a wide range of properties, allowing you to enable or disable features such as compression, data deduplication, or atime updates. By tweaking these properties, you can tailor your storage to your specific needs.

Managing and administering your ZFS system is done mainly through two command-line tools: zpool, which creates and manages pools and their vdevs, and zfs, which creates and manages datasets, snapshots, and properties. Familiarizing yourself with both is essential for effectively using the power and flexibility of ZFS.

For detailed instructions on ZFS installation, setup, and administration, it is recommended to refer to the OpenZFS or distribution-specific documentation. These resources provide step-by-step guides and comprehensive explanations to help you get started with ZFS.

Now that you have a basic understanding of installing and using ZFS, you are ready to explore its advanced features and capabilities. Whether you are a small-scale user or an enterprise-level administrator, ZFS offers powerful storage solutions that can meet your needs.

Take the time to learn more about ZFS, experiment with different configurations, and discover how it can transform your storage infrastructure.

Sample ZFS Installation and Configuration Commands:

Sample ZFS Command-Line Interface (CLI) Commands:
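A typical first-time setup with the zpool and zfs commands might look like the sequence below, generated from Python so it can be reviewed before running. It is a sketch under assumptions: the pool name `tank`, the dataset name `data`, the placeholder disk names, and the choice of a single RAIDz2 vdev are all hypothetical; `compression=lz4` matches the sample property settings, and `atime=off` is an optional tweak. By default it only prints the commands; passing `dry_run=False` runs them with `subprocess` and requires root privileges and disks that may be safely erased.

```python
import shlex
import subprocess


def setup_commands(pool: str, disks: list[str], dataset: str) -> list[list[str]]:
    """Return an example first-time setup sequence as argument lists."""
    fs = f"{pool}/{dataset}"
    return [
        ["zpool", "create", pool, "raidz2", *disks],  # one RAIDz2 vdev
        ["zfs", "create", fs],                         # a filesystem dataset
        ["zfs", "set", "compression=lz4", fs],         # enable LZ4 compression
        ["zfs", "set", "atime=off", fs],               # skip access-time updates
        ["zfs", "get", "compression,atime", fs],       # verify the properties
        ["zpool", "status", pool],                     # check pool health
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stops at the first failing command


if __name__ == "__main__":
    run(setup_commands("tank", ["sda", "sdb", "sdc", "sdd"], "data"))
```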

Sample ZFS Property Settings:

| Property | Value |
|---|---|
| compression | lz4 |
| dedup | off |

By exploring these sample commands and property settings, you can get a better understanding of how ZFS installation and configuration work in practice.

ZFS Performance Optimization: Tuning Parameters and Pool Properties

Optimizing ZFS performance is crucial to ensure optimal efficiency and responsiveness for your storage system. ZFS offers a wide range of tuning parameters and pool properties that can be adjusted to meet the specific requirements of your workload and hardware configuration.

One of the key areas for performance optimization in ZFS is caching. By adjusting the caching parameters, you can control how ZFS utilizes system memory to cache frequently accessed data. The Adaptive Replacement Cache (ARC) is the primary cache in ZFS, and it dynamically adjusts its size based on the available memory. By increasing the ARC size, you can improve read performance by increasing the amount of data that can be stored in memory.

Compression is another performance optimization feature in ZFS. By enabling compression, you can reduce the amount of data that needs to be read from and written to disk, which often improves overall performance. ZFS offers several compression algorithms, such as LZ4, ZSTD (in OpenZFS 2.0 and later), and GZIP, each with its own trade-off between compression ratio and CPU usage. Choosing the right algorithm depends on the nature of your data and the available CPU resources.
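How much compression helps depends heavily on the data. The sketch below uses Python's standard `zlib` module (DEFLATE, the algorithm behind ZFS's gzip option) as a stand-in, since LZ4 and ZSTD are not in the standard library; the sample data and the 128 KiB record size are illustrative assumptions. It compares repetitive, log-like text with random bytes, which behave like already-compressed media.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Original size divided by compressed size (above 1.0 means it shrank)."""
    return len(data) / len(zlib.compress(data, level))


if __name__ == "__main__":
    record = 128 * 1024  # one 128 KiB record
    log_like = (b"status=ok path=/tank/data user=alice\n" * 4000)[:record]
    random_like = os.urandom(record)  # stands in for already-compressed media
    print(f"log-like data: {compression_ratio(log_like):.1f}x")
    print(f"random data:   {compression_ratio(random_like):.3f}x")
```

Random data does not shrink at all (the output is slightly larger than the input). ZFS handles this case by storing a block uncompressed when compression does not save enough space, so enabling a fast algorithm such as LZ4 costs little on incompressible data.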

Data deduplication, if enabled, can also have a large impact on performance in ZFS. Deduplication identifies duplicate blocks and stores them only once, reducing the overall storage footprint. However, it is expensive: ZFS must consult the deduplication table on every write, which costs CPU, RAM, and I/O, and performance can drop sharply once the table no longer fits in memory. Carefully evaluate the benefits and trade-offs of deduplication before enabling it in your ZFS pool.

Tuning Parameters

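ZFS provides a variety of tuning parameters that can be adjusted to fine-tune its behavior. On FreeBSD they are exposed as sysctls under vfs.zfs; on Linux they are kernel module parameters, which can be changed at runtime under /sys/module/zfs/parameters/ or set persistently in a modprobe configuration file such as /etc/modprobe.d/zfs.conf.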

Example: To adjust the ARC size on Linux, set the zfs_arc_max module parameter, which caps the amount of memory, in bytes, that the ARC may use. Raising it lets the ARC cache more data and can improve read performance, but make sure enough memory remains for applications and the operating system.
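Because `zfs_arc_max` is given in bytes, it is easy to get the value wrong by hand. The sketch below converts a size in GiB into an `options zfs zfs_arc_max=…` line for a modprobe configuration file and shows the runtime sysfs path on Linux. The 4 GiB headroom check and all function names are hypothetical choices for illustration, not ZFS defaults or recommendations.

```python
import os

SYSFS_PARAM = "/sys/module/zfs/parameters/zfs_arc_max"  # runtime setting on Linux
GIB = 1024 ** 3


def arc_max_bytes(gib: int) -> int:
    """zfs_arc_max is expressed in bytes."""
    return gib * GIB


def modprobe_line(gib: int) -> str:
    """Line for a modprobe config file such as /etc/modprobe.d/zfs.conf."""
    return f"options zfs zfs_arc_max={arc_max_bytes(gib)}"


def leaves_headroom(arc_bytes: int, total_ram_bytes: int, reserve_gib: int = 4) -> bool:
    """Hypothetical sanity check: keep some RAM outside the ARC for everything else."""
    return arc_bytes + reserve_gib * GIB <= total_ram_bytes


if __name__ == "__main__":
    print(modprobe_line(16))  # options zfs zfs_arc_max=17179869184
    # Physical memory via POSIX sysconf (available on Linux).
    total = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    print("fits with headroom:", leaves_headroom(arc_max_bytes(16), total))
    print("runtime path:", SYSFS_PARAM)
```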

Other tuning parameters include zfs_dirty_data_max, which caps the amount of dirty (not yet written) data held in memory; zfs_delay_min_dirty_percent, the level of dirty data, as a percentage of zfs_dirty_data_max, at which ZFS starts delaying incoming writes; and the zfs_vdev_*_max_active family, which controls per-vdev I/O concurrency (older releases used zfs_vdev_max_pending for this). Consult the OpenZFS documentation to understand the impact and appropriate values of these parameters for your specific workload and hardware configuration.

Pool and Dataset Properties

In addition to tuning parameters, ZFS offers properties that control how data is stored and managed. Pool properties are set with zpool set, while dataset properties, including the two below, are set per dataset with zfs set and inherited by child datasets.

For example, the “recordsize” property sets the maximum block size used for files in a dataset (128K by default). Choosing a record size that matches your workload can significantly affect performance: larger records favor large sequential I/O, while smaller records suit small random I/O such as database pages.
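The random I/O side of this trade-off comes from read amplification: ZFS checksums whole blocks, so reading a few kilobytes from the middle of a large record means reading and verifying the entire record. The sketch below is a simplified model under stated assumptions: the read falls inside one full-size record, and ARC hits, compression, and reads that span records are ignored. The function name is hypothetical; the property itself would be set with something like `zfs set recordsize=16K tank/db`.

```python
KIB = 1024


def read_amplification(recordsize: int, read_size: int) -> float:
    """Bytes fetched from disk per byte requested for one small random read.

    Simplified model: the read falls inside one full-size record, which
    must be read whole so its checksum can be verified. Ignores ARC hits,
    compression, and reads that span records.
    """
    if recordsize <= 0 or read_size <= 0:
        raise ValueError("sizes must be positive")
    return max(1.0, recordsize / read_size)


if __name__ == "__main__":
    for rs in (16 * KIB, 128 * KIB, 1024 * KIB):
        print(f"recordsize={rs // KIB}K, 4K random read: "
              f"{read_amplification(rs, 4 * KIB):.0f}x")
```

For 4 KiB random reads this gives 4x at 16K, 32x at 128K, and 256x at 1M. For large sequential reads the whole record is wanted anyway, so large records add no waste there and reduce per-block overhead.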

The “logbias” property controls how synchronous writes use the intent log (ZIL). The default, “latency,” sends sync writes to a LOG vdev if one exists, minimizing response time. Setting it to “throughput” bypasses the LOG vdev and writes the data directly to the pool, favoring overall pool throughput over individual write latency. Choosing the appropriate logbias value depends on the specific requirements of your workload.

ZFS Performance Optimization Summary

Tuning ZFS performance requires a thorough understanding of the available parameters and properties. Adjusting caching parameters, enabling compression, and evaluating data deduplication can significantly improve overall performance. Fine-tuning these settings based on your workload and hardware configuration is essential to achieve optimal results.

By carefully considering the tuning parameters and pool properties, you can optimize your ZFS storage system for maximum performance and responsiveness. Consulting the OpenZFS documentation and specific guides can provide detailed insights and recommendations for applying these performance optimizations.

Challenges and Limitations of ZFS: Licensing, Compatibility, and Kernel Integration

While ZFS offers numerous benefits and advanced features, it also has certain challenges and limitations that users should be aware of. These challenges primarily revolve around licensing, compatibility, and kernel integration.

Licensing Compatibility

One of the notable challenges with ZFS on Linux is the licensing incompatibility between ZFS (CDDL) and the Linux kernel (GPLv2). This mismatch prevents ZFS from being merged into the mainline kernel, so OpenZFS is distributed as a separate, out-of-tree kernel module. As a result, additional steps are required to enable ZFS-on-root or achieve full compatibility with certain Linux distributions.

This licensing issue can impact the ease of deployment and overall compatibility of ZFS on Linux systems. Users should carefully consider the licensing requirements and limitations when selecting a platform or distribution for ZFS deployment.

Kernel Integration and Compatibility Issues

An additional challenge arises from kernel updates. OpenZFS is often built against each installed kernel with DKMS (Dynamic Kernel Module Support), and a new kernel release may not yet be supported by the installed OpenZFS version, causing the module build to fail. This may require waiting for an OpenZFS update, holding back the kernel, or building ZFS manually.
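Before a kernel upgrade, it can help to check whether the new kernel falls within the range your OpenZFS release supports. The sketch below assumes a `META` file in the OpenZFS source tree with `Key: value` lines including `Linux-Minimum` and `Linux-Maximum`; the parsing helpers are hypothetical, the sample values are illustrative only, and the check does not replace the DKMS build or your distribution's packaging.

```python
import platform
import re


def parse_version(text: str) -> tuple[int, int]:
    """Extract (major, minor) from strings like '6.5.0-35-generic' or '6.8'."""
    match = re.match(r"(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"not a version string: {text!r}")
    return int(match.group(1)), int(match.group(2))


def parse_meta(meta_text: str) -> dict[str, str]:
    """Parse 'Key: value' lines, as used by a META-style file."""
    fields = {}
    for line in meta_text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def kernel_supported(kernel_release: str, meta_text: str) -> bool:
    """True if the kernel's major.minor lies within Linux-Minimum..Linux-Maximum."""
    meta = parse_meta(meta_text)
    kernel = parse_version(kernel_release)
    low = parse_version(meta["Linux-Minimum"])
    high = parse_version(meta["Linux-Maximum"])
    return low <= kernel <= high


if __name__ == "__main__":
    # Illustrative values only; read the real META file of your OpenZFS release.
    meta = "Linux-Maximum: 6.8\nLinux-Minimum: 3.10\n"
    release = platform.release() if platform.system() == "Linux" else "6.5.0-35-generic"
    print(release, "supported:", kernel_supported(release, meta))
```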

To mitigate these challenges, it is recommended to stay up to date with the latest developments in ZFS and consult the OpenZFS community for guidance on dealing with licensing and kernel integration issues. By remaining proactive and informed, users can effectively navigate these challenges and optimize their ZFS deployments.

Despite these challenges, ZFS remains a popular choice for data storage due to its comprehensive feature set and reliability. Understanding these limitations and the steps needed to address them will help users make informed decisions and ensure successful implementation of ZFS in their environments.

Conclusion

ZFS is a powerful and feature-rich storage architecture that changes how data can be managed and stored. With features including data integrity checking, snapshots, copy-on-write, and self-healing, ZFS offers strong reliability and data protection. By understanding the concepts of vdevs, ZFS pool structure, and configuration options, users can harness the full potential of ZFS and optimize their storage systems for their specific needs.

Despite certain challenges and limitations, such as licensing and kernel integration issues, ZFS continues to be a popular choice for both small-scale deployments and enterprise-level storage solutions. Its flexibility, scalability, and robustness make it an ideal solution for a wide range of applications.

To make the most of ZFS, it is essential to consider hardware requirements, tuning parameters, and best practices. By aligning the hardware configuration with ZFS recommendations and fine-tuning key parameters, users can create resilient and high-performance storage systems.

In summary, ZFS offers a comprehensive and reliable storage solution that empowers users to manage their data efficiently and securely. With its innovative features and continuous development by the OpenZFS community, ZFS remains at the forefront of storage technology, meeting the demands of modern data management.

FAQ

What is a vdev in ZFS?

A vdev, short for virtual device, is a crucial component of the ZFS storage architecture. It is a collection of block or character devices arranged in a specific topology and is used to store and manage data in ZFS.

How does a ZFS storage pool structure work?

The ZFS storage hierarchy consists of zpools, vdevs, and devices. A zpool is the top-level structure that contains one or more vdevs, each of which contains one or more devices. Data is organized into datasets, which span the whole pool, and stored as blocks, each of which resides on a single vdev.

What are the different types of ZFS vdev topologies?

ZFS offers various vdev topologies, including single, mirror, and RAIDz1/2/3. Each topology has its own characteristics in terms of fault tolerance and performance, allowing users to choose the right level of redundancy based on their storage needs.

What are the support vdev types in ZFS?

ZFS has support vdev types, including LOG, CACHE, SPECIAL, and SPARE. These vdev types provide specific functionality to the zpool, such as speeding up synchronous writes, caching frequently read data, storing metadata (and optionally small blocks), and providing hot spares to replace failed devices.

How does ZFS organize data in datasets and blocks?

Data in ZFS is organized and stored in datasets, which are analogous to mounted filesystems, and blocks, which represent individual units of data stored on a single vdev. ZFS uses a copy-on-write transactional mechanism, ensuring data integrity and enabling features like snapshots and self-healing of corrupted data.

What are the key differences between OpenZFS and Oracle ZFS?

OpenZFS and Oracle ZFS are the two main branches of ZFS. OpenZFS is open source and widely used, while Oracle ZFS is proprietary and typically found in Oracle Solaris and Oracle's ZFS Storage Appliances. They share a common origin but have since diverged, with distinct features and priorities.

What are the hardware requirements for building a ZFS storage server?

While specialized hardware is not required, it is recommended to have ECC RAM for better data integrity. Any standard unaltered drives can be used in ZFS, and it’s advised to avoid RAID cards and BIOS RAID. The amount of RAM is important, as it affects the caching and overall performance of ZFS.

How do I install and use ZFS?

ZFS is available on various operating systems and can be installed from repositories or specific distributions that include ZFS by default. Once installed, you can create a zpool, configure vdevs, create datasets, and assign properties using the ZFS command-line interface (CLI).

How can I optimize ZFS performance?

ZFS provides tuning parameters and pool properties that can be adjusted to optimize performance based on specific use cases. By fine-tuning caching, compression, and data deduplication settings, users can optimize ZFS for their workload and hardware configuration.

What are the challenges and limitations of ZFS?

ZFS has certain challenges and limitations, including a license (CDDL) that is incompatible with the Linux kernel's GPLv2, which keeps ZFS out of the mainline kernel. Additional steps may be required to enable ZFS-on-root or achieve full compatibility with certain distributions, and kernel updates can break the OpenZFS kernel module, which is often built with DKMS.

What are the key features and benefits of ZFS?

ZFS is a powerful and feature-rich storage architecture that combines filesystem and volume management capabilities. It offers advanced features such as data integrity, snapshots, copy-on-write, and self-healing, making it a reliable choice for data storage.



Mark is a senior content editor at Text-Center.com and has more than 20 years of experience with Linux and Windows operating systems. He also writes for Biteno.com.